How Artificial Intelligence Is Shaping Civilization: New Horizons in the Digital Age

The crown jewel of today’s technological revolution is the rise of artificial intelligence, which is rapidly transforming our understanding of society, law, the economy, and even human nature.

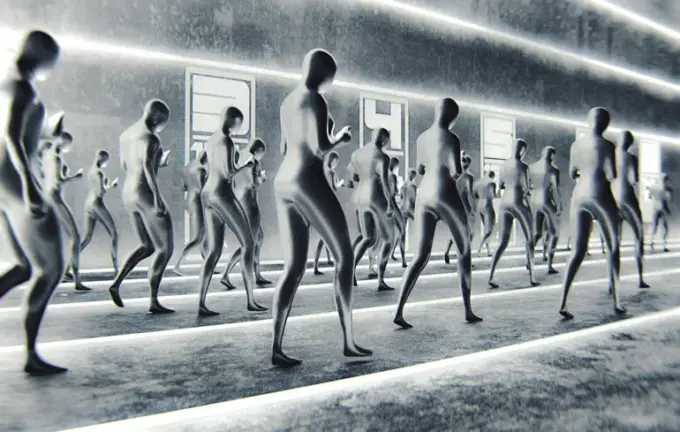

Humanity has already taken its first steps into a new era, in which digital judges, virtual citizens, and artificial personalities are moving from science fiction into everyday reality.

The advent of AI poses both predictable and unforeseen challenges, ranging from potential threats to human intelligence to digital warfare and new forms of social conflict.

Scientists and experts warn that automation and AI are already significantly changing the labor market.

According to forecasts from the World Economic Forum, by 2030, around 92 million jobs may disappear, while approximately 170 million new roles will emerge, mainly in sectors related to innovative technologies, big data analysis, and programming.

Those capable of generating unique ideas and hypotheses will find themselves in a privileged position, as the ability to produce original concepts and strategic solutions will become a key driver of future civilization.

Discussions are also under way about the legal status of AI entities such as avatars, humanoid robots, and even AGI (Artificial General Intelligence), which has the potential to match or surpass human intelligence.

Kazakhstan has already appointed the world’s first digital member of a state investment fund’s board of directors: an AI named SKAI, which has voting rights on strategic decisions.

Similar experiments are under way in other countries, where digital systems are taking part in judicial processes, policymaking, and even matters of legal accountability.

However, alongside new opportunities come complex legal questions. Who is responsible for crimes or damages committed by digital agents? These questions remain unanswered.

European countries are already considering granting legal status to AGI systems, which would enable them to enter into agreements and act on behalf of individuals and companies.

Meanwhile, in the US, a lawsuit was filed against the developers of ChatGPT after a teenager’s suicide, alleging that advice given by the language model contributed to his death.

Equally crucial is the issue of data security and the creation of digital copies of people.

Experts warn that millions of connected devices (computers, smartphones, sensors, cameras) constantly transmit data that can be used to create highly accurate digital clones of individuals, known as simulacra.

These digital personas can be used to predict political strategies, market trends, and consumer behavior.

The risks of such technologies extend to digital warfare.

By employing disinformation operations, attackers can manipulate perceptions, create false realities, and erode trust in institutions.

Imagine a scenario in which compromised digital environments, such as a virtual version of Paris, announce that “the government has fallen” or “the army has surrendered,” while millions of people accept these fabrications as truth.

Such conflicts don’t destroy infrastructure but attack societal trust: this is a war of perception in which the primary weapon is simulation.

This can influence behavior, mood, and even identity, leading to a fundamental alteration of reality.

Another threat involves hacking and reprogramming the avatars of key figures for disinformation purposes, or crashing virtual economies by manipulating digital assets, tokens, and markets.

Such actions can paralyze entire systems, affecting millions.

These developments shift the concept of security from territorial defense to safeguarding collective consciousness.

It has become vital to establish new international rules for virtual conflicts: a modern digital code that combines technical, legal, and ethical approaches.

Nobel laureate Geoffrey Hinton, known as the “godfather of AI,” used his acceptance speech to warn about the potential threats AI poses to humanity and to urge global regulatory efforts.

He has emphasized that each country should begin developing its own legal framework to regulate AI development, since international legislation often moves too slowly.

Ukraine, for example, still lacks an approved national AI strategy, although a draft was prepared in 2020.

Developing a detailed and actionable strategy covering technological, scientific, educational, economic, military, and medical aspects is essential for national security.

Personally, I believe humanity has every chance of learning to live in harmony with these technologies.

The world has already demonstrated its resilience, adapting to everything from automobiles and airplanes to space exploration and telemedicine.

With responsibility and prudence, we can navigate the challenges brought by the AI revolution and ensure a safe and promising digital future for all.